Journal Description

Entropy

Entropy is an international and interdisciplinary peer-reviewed open access journal of entropy and information studies, published monthly online by MDPI. The International Society for the Study of Information (IS4SI) and Spanish Society of Biomedical Engineering (SEIB) are affiliated with Entropy and their members receive a discount on the article processing charge.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, SCIE (Web of Science), MathSciNet, Inspec, PubMed, PMC, Astrophysics Data System, and other databases.

- Journal Rank: JCR - Q2 (Physics, Multidisciplinary) / CiteScore - Q1 (Mathematical Physics)

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 20.8 days after submission; acceptance to publication is undertaken in 2.9 days (median values for papers published in this journal in the second half of 2023).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.

- Testimonials: See what our editors and authors say about Entropy.

- Companion journals for Entropy include: Foundations, Thermo and MAKE.

Impact Factor: 2.7 (2022); 5-Year Impact Factor: 2.6 (2022)

Latest Articles

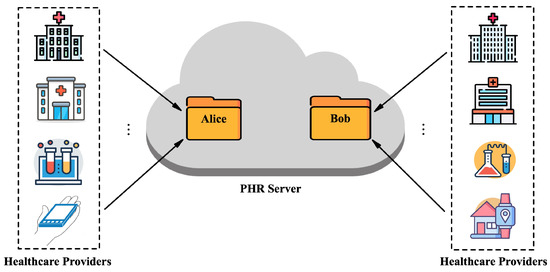

Identity-Based Matchmaking Encryption with Equality Test

Entropy 2024, 26(1), 74; https://doi.org/10.3390/e26010074 - 15 Jan 2024

Abstract


The identity-based encryption with equality test (IBEET) has become a hot research topic in cloud computing as it provides an equality test for ciphertexts generated under different identities while preserving the confidentiality. Subsequently, for the sake of the confidentiality and authenticity of the data, the identity-based signcryption with equality test (IBSC-ET) has been put forward. Nevertheless, the existing schemes do not consider the anonymity of the sender and the receiver, which leads to the potential leakage of sensitive personal information. How to ensure confidentiality, authenticity, and anonymity in the IBEET setting remains a significant challenge. In this paper, we put forward the concept of the identity-based matchmaking encryption with equality test (IBME-ET) to address this issue. We formalized the system model, the definition, and the security models of the IBME-ET and, then, put forward a concrete scheme. Furthermore, our scheme was confirmed to be secure and practical by proving its security and evaluating its performance.

Full article

(This article belongs to the Special Issue Advances in Information Sciences and Applications II)

►

Show Figures

Open AccessArticle

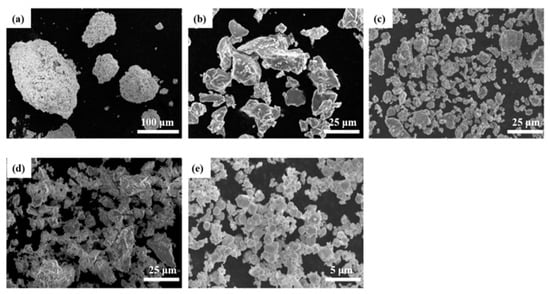

Study on Microstructure and High Temperature Stability of WTaVTiZrx Refractory High Entropy Alloy Prepared by Laser Cladding

Entropy 2024, 26(1), 73; https://doi.org/10.3390/e26010073 - 15 Jan 2024

Abstract


The extremely harsh environment of the high temperature plasma imposes strict requirements on the construction materials of the first wall in a fusion reactor. In this work, a refractory alloy system, WTaVTiZrx, with low activation and high entropy, was theoretically designed based on a semi-empirical formula and produced using a laser cladding method. The effects of Zr proportions on the metallographic microstructure, phase composition, and alloy chemistry of a high-entropy alloy cladding layer were investigated using a metallographic microscope, XRD (X-ray diffraction), SEM (scanning electron microscope), and EDS (energy dispersive spectrometer), respectively. The high-entropy alloys have a single-phase BCC structure, and the cladding layers exhibit a typical dendritic microstructure feature. The evolution of microstructure and mechanical properties of the high-entropy alloys, with respect to annealing temperature, was studied to reveal the performance stability of the alloy at a high temperature. The microstructure of the samples annealed at 900 °C for 5–10 h did not show significant changes compared to the as-cast samples, and the microhardness increased to 988.52 HV, which was higher than that of the as-cast samples (725.08 HV). When annealed at 1100 °C for 5 h, the microstructure remained unchanged, and the microhardness increased. However, after annealing for 10 h, black substances appeared in the microstructure, and the microhardness decreased, but it was still higher than the matrix. When annealed at 1200 °C for 5–10 h, the microhardness did not increase significantly compared to the as-cast samples, and after annealing for 10 h, the microhardness was even lower than that of the as-cast samples. The phase of the high entropy alloy did not change significantly after high-temperature annealing, indicating good phase stability at high temperatures.

Full article

Figure 1

Open AccessArticle

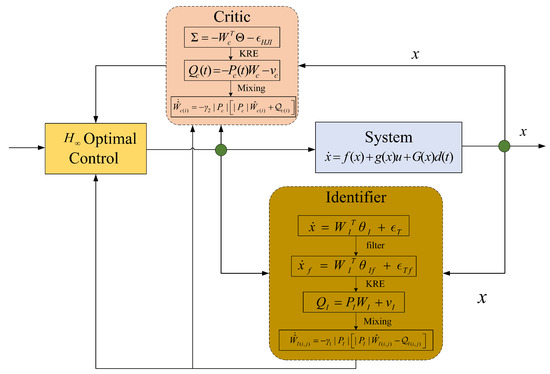

Optimal Robust Control of Nonlinear Systems with Unknown Dynamics via NN Learning with Relaxed Excitation

Entropy 2024, 26(1), 72; https://doi.org/10.3390/e26010072 - 14 Jan 2024

Abstract


This paper presents an adaptive learning structure based on neural networks (NNs) to solve the optimal robust control problem for nonlinear continuous-time systems with unknown dynamics and disturbances. First, a system identifier is introduced to approximate the unknown system matrices and disturbances with the help of NNs and parameter estimation techniques. To obtain the optimal solution of the optimal robust control problem, a critic learning control structure is proposed to compute the approximate controller. Unlike existing identifier-critic NNs learning control methods, novel adaptive tuning laws based on Kreisselmeier’s regressor extension and mixing technique are designed to estimate the unknown parameters of the two NNs under relaxed persistence of excitation conditions. Furthermore, theoretical analysis is also given to prove the significant relaxation of the proposed convergence conditions. Finally, effectiveness of the proposed learning approach is demonstrated via a simulation study.

(This article belongs to the Special Issue Intelligent Modeling and Control)

Open Access Article

Leakage Benchmarking for Universal Gate Sets

Entropy 2024, 26(1), 71; https://doi.org/10.3390/e26010071 - 13 Jan 2024

Abstract


Errors are common issues in quantum computing platforms, among which leakage is one of the most-challenging to address. This is because leakage, i.e., the loss of information stored in the computational subspace to undesired subspaces in a larger Hilbert space, is more difficult to detect and correct than errors that preserve the computational subspace. As a result, leakage presents a significant obstacle to the development of fault-tolerant quantum computation. In this paper, we propose an efficient and accurate benchmarking framework called leakage randomized benchmarking (LRB), for measuring leakage rates on multi-qubit quantum systems. Our approach is more insensitive to state preparation and measurement (SPAM) noise than existing leakage benchmarking protocols, requires fewer assumptions about the gate set itself, and can be used to benchmark multi-qubit leakages, which has not been achieved previously. We also extended the LRB protocol to an interleaved variant called interleaved LRB (iLRB), which can benchmark the average leakage rate of generic n-site quantum gates with reasonable noise assumptions. We demonstrate the iLRB protocol on benchmarking generic two-qubit gates realized using flux tuning and analyzed the behavior of iLRB under corresponding leakage models. Our numerical experiments showed good agreement with the theoretical estimations, indicating the feasibility of both the LRB and iLRB protocols.

Full article

(This article belongs to the Special Issue Quantum Computing in the NISQ Era)

►▼

Show Figures

Figure 1

Open AccessArticle

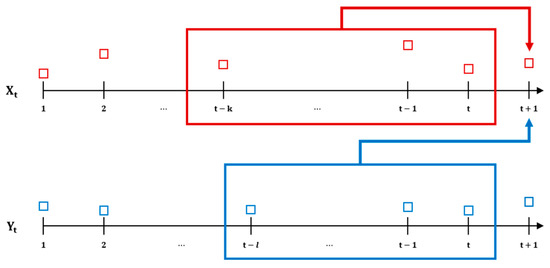

Enhancing Exchange-Traded Fund Price Predictions: Insights from Information-Theoretic Networks and Node Embeddings


Entropy 2024, 26(1), 70; https://doi.org/10.3390/e26010070 - 12 Jan 2024

Abstract


This study presents a novel approach to predicting price fluctuations for U.S. sector index ETFs. By leveraging information-theoretic measures like mutual information and transfer entropy, we constructed threshold networks highlighting nonlinear dependencies between log returns and trading volume rate changes. We derived centrality measures and node embeddings from these networks, offering unique insights into the ETFs’ dynamics. By integrating these features into gradient-boosting algorithm-based models, we significantly enhanced the predictive accuracy. Our approach offers improved forecast performance for U.S. sector index futures and adds a layer of explainability to the existing literature.

(This article belongs to the Special Issue Information Theory-Based Approach to Portfolio Optimization)

Open Access Article

Electrodynamics of Superconductors: From Lorentz to Galilei at Zero Temperature

Entropy 2024, 26(1), 69; https://doi.org/10.3390/e26010069 - 12 Jan 2024

Abstract


We discuss the derivation of the electrodynamics of superconductors coupled to the electromagnetic field from a Lorentz-invariant bosonic model of Cooper pairs. Our results are obtained at zero temperature where, according to the third law of thermodynamics, the entropy of the system is zero. In the nonrelativistic limit, we obtain a Galilei-invariant superconducting system, which differs with respect to the familiar Schrödinger-like one. From this point of view, there are similarities with the Pauli equation of fermions, which is derived from the Dirac equation in the nonrelativistic limit and has a spin-magnetic field term in contrast with the Schrödinger equation. One of the peculiar effects of our model is the decay of a static electric field inside a superconductor exactly with the London penetration length. In addition, our theory predicts a modified D’Alembert equation for the massive electromagnetic field also in the case of nonrelativistic superconducting matter. We emphasize the role of the Nambu–Goldstone phase field, which is crucial to obtain the collective modes of the superconducting matter field. In the special case of a nonrelativistic neutral superfluid, we find a gapless Bogoliubov-like spectrum, while for the charged superfluid we obtain a dispersion relation that is gapped by the plasma frequency.

Full article

(This article belongs to the Section Statistical Physics)

Open AccessArticle

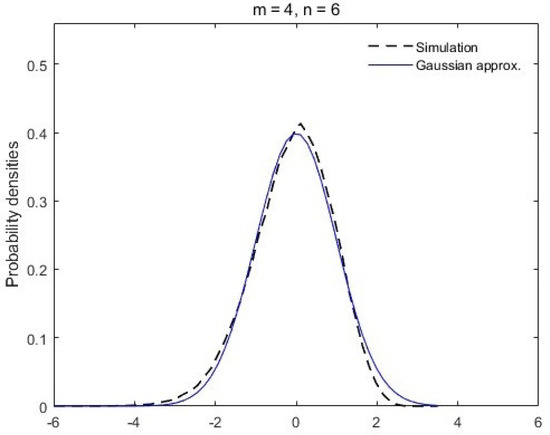

Square Root Statistics of Density Matrices and Their Applications

Entropy 2024, 26(1), 68; https://doi.org/10.3390/e26010068 - 12 Jan 2024

Abstract


To estimate the degree of quantum entanglement of random pure states, it is crucial to understand the statistical behavior of entanglement indicators such as the von Neumann entropy, quantum purity, and entanglement capacity. These entanglement metrics are functions of the spectrum of density matrices, and their statistical behavior over different generic state ensembles has been intensively studied in the literature. As an alternative metric, in this work, we study the sum of the square root spectrum of density matrices, which is relevant to negativity and fidelity in quantum information processing. In particular, we derive the finite-size mean and variance formulas of the sum of the square root spectrum over the Bures–Hall ensemble, extending known results obtained recently over the Hilbert–Schmidt ensemble.
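As a small illustration of the spectral quantities named in this abstract, the sketch below evaluates the von Neumann entropy, the purity, and the sum of the square root spectrum from a list of density-matrix eigenvalues. It is not taken from the article: the function names are hypothetical, and the code only assumes the standard definitions $S = -\sum_i \lambda_i \ln \lambda_i$, $\operatorname{tr}\rho^2 = \sum_i \lambda_i^2$, and $\sum_i \sqrt{\lambda_i}$. It does not sample from the Bures–Hall or Hilbert–Schmidt ensembles.

```python
import math

def von_neumann_entropy(eigs):
    """Von Neumann entropy -sum(l * ln l) in nats; zero eigenvalues contribute 0."""
    return -sum(l * math.log(l) for l in eigs if l > 0.0)

def purity(eigs):
    """Purity tr(rho^2), computed as the sum of squared eigenvalues."""
    return sum(l * l for l in eigs)

def sqrt_spectrum_sum(eigs):
    """Sum of the square roots of the eigenvalues."""
    return sum(math.sqrt(l) for l in eigs)

# Illustrative inputs (assumptions, not data from the article):
# a maximally mixed state of dimension 4 and a pure state.
mixed = [0.25, 0.25, 0.25, 0.25]
pure = [1.0, 0.0, 0.0, 0.0]

for name, eigs in (("mixed", mixed), ("pure", pure)):
    print(name, von_neumann_entropy(eigs), purity(eigs), sqrt_spectrum_sum(eigs))
```

For a maximally mixed state of dimension m, these three quantities reduce to ln m, 1/m, and sqrt(m), which gives a quick sanity check on any implementation.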

Full article

(This article belongs to the Section Statistical Physics)

►▼

Show Figures

Figure 1

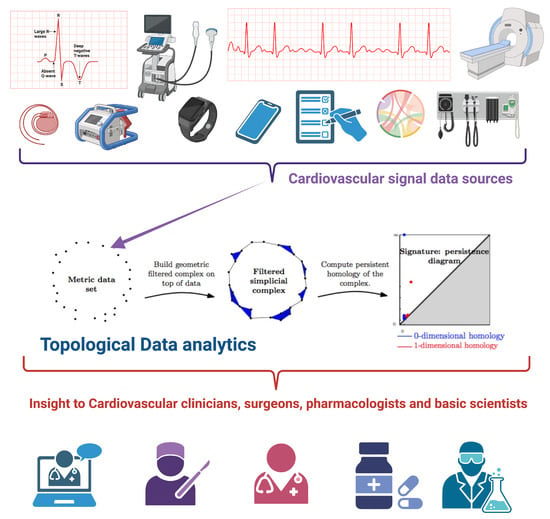

Open AccessReview

Topological Data Analysis in Cardiovascular Signals: An Overview

Entropy 2024, 26(1), 67; https://doi.org/10.3390/e26010067 - 12 Jan 2024

Abstract


Topological data analysis (TDA) is a recent approach for analyzing and interpreting complex data sets based on ideas from a branch of mathematics called algebraic topology. TDA has proven useful to disentangle non-trivial data structures in a broad range of data analytics problems including the study of cardiovascular signals. Here, we aim to provide an overview of the application of TDA to cardiovascular signals and its potential to enhance the understanding of cardiovascular diseases and their treatment in the form of a literature or narrative review. We first introduce the concept of TDA and its key techniques, including persistent homology, Mapper, and multidimensional scaling. We then discuss the use of TDA in analyzing various cardiovascular signals, including electrocardiography, photoplethysmography, and arterial stiffness. We also discuss the potential of TDA to improve the diagnosis and prognosis of cardiovascular diseases, as well as its limitations and challenges. Finally, we outline future directions for the use of TDA in cardiovascular signal analysis and its potential impact on clinical practice. Overall, TDA shows great promise as a powerful tool for the analysis of complex cardiovascular signals and may offer significant insights into the understanding and management of cardiovascular diseases.

Full article

(This article belongs to the Special Issue Nonlinear Dynamics in Cardiovascular Signals)

►▼

Show Figures

Figure 1

Open AccessArticle

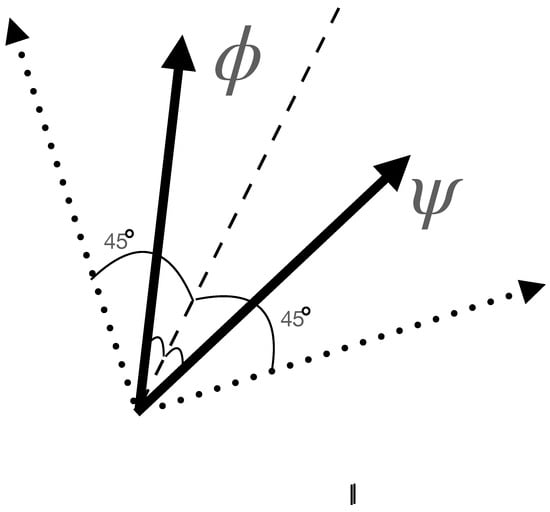

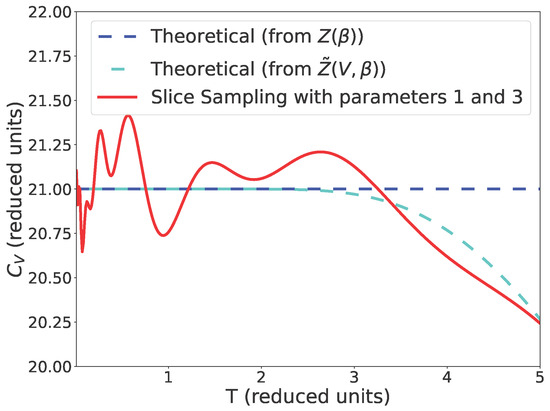

A Quantum Double-or-Nothing Game: An Application of the Kelly Criterion to Spins

Entropy 2024, 26(1), 66; https://doi.org/10.3390/e26010066 - 12 Jan 2024

Abstract


A quantum game is constructed from a sequence of independent and identically polarised spin-1/2 particles. Information about their possible polarisation is provided to a bettor, who can wager in successive double-or-nothing games on measurement outcomes. The choice at each stage is how much to bet and in which direction to measure the individual particles. The portfolio’s growth rate rises as the measurements are progressively adjusted in response to the accumulated information. Wealth is amassed through astute betting. The optimal classical strategy is called the Kelly criterion and plays a fundamental role in portfolio theory and consequently quantitative finance. The optimal quantum strategy is determined numerically and shown to differ from the classical strategy. This paper contributes to the development of quantum finance, as aspects of portfolio optimisation are extended to the quantum realm. Intriguing trade-offs between information gain and portfolio growth are described.
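To make the classical baseline concrete, here is a minimal sketch of the Kelly criterion for an even-money (double-or-nothing) bet, assuming the bettor wins with a known probability p. Under that assumption the optimal fraction of wealth to wager is 2p − 1, and the resulting growth rate is 1 − H(p) bits per bet, where H is the binary entropy. The function names are hypothetical, and the quantum strategy that the abstract says was determined numerically is not reproduced here.

```python
import math

def kelly_fraction(p):
    """Optimal wealth fraction for an even-money bet won with probability p."""
    return max(0.0, 2.0 * p - 1.0)

def growth_rate(p, f):
    """Expected log2 growth of wealth per bet when wagering fraction f (0 <= f < 1)."""
    if not 0.0 <= f < 1.0:
        raise ValueError("f must satisfy 0 <= f < 1")
    return p * math.log2(1.0 + f) + (1.0 - p) * math.log2(1.0 - f)

def binary_entropy(p):
    """Binary entropy H(p) in bits, with H(0) = H(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)

# Illustrative value of p (an assumption, not taken from the article).
p = 0.75
f_star = kelly_fraction(p)
print(f_star, growth_rate(p, f_star), 1.0 - binary_entropy(p))
```

Betting more or less than the Kelly fraction lowers the expected logarithmic growth, which is why the criterion links information about the outcome to portfolio growth.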

Full article

(This article belongs to the Special Issue Quantum Correlations, Contextuality, and Quantum Nonlocality)

►▼

Show Figures

Figure 1

Open AccessArticle

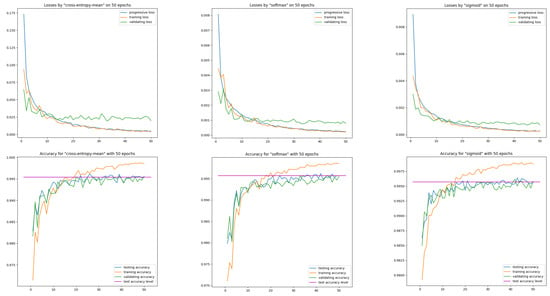

Cross Entropy in Deep Learning of Classifiers Is Unnecessary—ISBE Error Is All You Need

Entropy 2024, 26(1), 65; https://doi.org/10.3390/e26010065 - 12 Jan 2024

Abstract


In deep learning of classifiers, the cost function usually takes the form of a combination of SoftMax and CrossEntropy functions. The SoftMax unit transforms the scores predicted by the model network into assessments of the degree (probabilities) of an object’s membership to a given class. On the other hand, CrossEntropy measures the divergence of this prediction from the distribution of target scores. This work introduces the ISBE functionality, justifying the thesis about the redundancy of cross-entropy computation in deep learning of classifiers. Not only can we omit the calculation of entropy, but also, during back-propagation, there is no need to direct the error to the normalization unit for its backward transformation. Instead, the error is sent directly to the model’s network. Using examples of perceptron and convolutional networks as classifiers of images from the MNIST collection, it is observed for ISBE that results are not degraded, not only with SoftMax but also with other activation functions such as Sigmoid, Tanh, or their hard variants HardSigmoid and HardTanh. Moreover, savings in the total number of operations were observed within the forward and backward stages. The article is addressed to all deep learning enthusiasts but primarily to programmers and students interested in the design of deep models. It illustrates in code snippets possible ways to implement ISBE functionality and also formally proves that the SoftMax trick only applies to the class of dilated SoftMax functions with relocations.
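The well-known identity behind sending the error straight to the network can be checked numerically: for a target vector t that sums to one, the gradient of CrossEntropy(SoftMax(z), t) with respect to the scores z is simply SoftMax(z) − t. The sketch below is a plain-Python check of that standard identity against central finite differences. It is not the article's ISBE code, and all function names and input values are hypothetical.

```python
import math

def softmax(z):
    """Numerically stable SoftMax of a list of scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def cross_entropy(p, t):
    """Cross entropy -sum(t_i * ln p_i); terms with t_i == 0 are skipped."""
    return -sum(ti * math.log(pi) for pi, ti in zip(p, t) if ti > 0.0)

def analytic_grad(z, t):
    """Closed-form gradient of cross_entropy(softmax(z), t) w.r.t. z: p - t."""
    return [pi - ti for pi, ti in zip(softmax(z), t)]

def numeric_grad(z, t, eps=1e-6):
    """Central finite-difference gradient of the same loss, for comparison."""
    grads = []
    for i in range(len(z)):
        zp, zm = list(z), list(z)
        zp[i] += eps
        zm[i] -= eps
        diff = cross_entropy(softmax(zp), t) - cross_entropy(softmax(zm), t)
        grads.append(diff / (2.0 * eps))
    return grads

# Illustrative scores and one-hot target (assumptions, not from the article).
z = [2.0, -1.0, 0.5]
t = [0.0, 1.0, 0.0]
print(analytic_grad(z, t))
print(numeric_grad(z, t))
```

Because the closed-form gradient needs only the SoftMax output and the target, the loss value itself never has to be computed during training.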

Full article

(This article belongs to the Special Issue Entropy in Machine Learning Applications)

►▼

Show Figures

Figure 1

Open AccessArticle

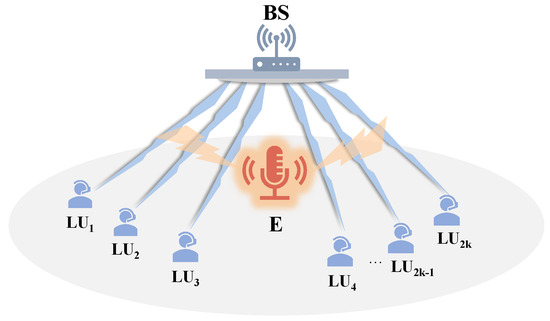

Secure User Pairing and Power Allocation for Downlink Non-Orthogonal Multiple Access against External Eavesdropping

Entropy 2024, 26(1), 64; https://doi.org/10.3390/e26010064 - 11 Jan 2024

Abstract


We propose a secure user pairing (UP) and power allocation (PA) strategy for a downlink Non-Orthogonal Multiple Access (NOMA) system when there exists an external eavesdropper. The secure transmission of data through the downlink is constructed to optimize both UP and PA. This optimization aims to maximize the achievable sum secrecy rate (ASSR) while adhering to a limit on the rate for each user. However, this poses a challenge as it involves a mixed integer nonlinear programming (MINLP) problem, which cannot be efficiently solved through direct search methods due to its complexity. To handle this gracefully, we first divide the original problem into two smaller issues, i.e., an optimal PA problem for two paired users and an optimal UP problem. Next, we obtain the closed-form optimal solution for PA between two users and UP in a simplified NOMA system involving four users. Finally, the result is extended to a general

(This article belongs to the Section Multidisciplinary Applications)

►▼

Show Figures

Figure 1

Open AccessArticle

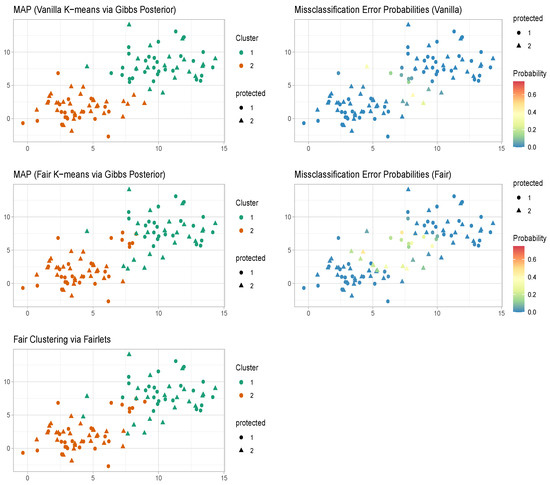

A Gibbs Posterior Framework for Fair Clustering

Entropy 2024, 26(1), 63; https://doi.org/10.3390/e26010063 - 11 Jan 2024

Abstract


The rise of machine learning-driven decision-making has sparked a growing emphasis on algorithmic fairness. Within the realm of clustering, the notion of balance is utilized as a criterion for attaining fairness, which characterizes a clustering mechanism as fair when the resulting clusters maintain a consistent proportion of observations representing individuals from distinct groups delineated by protected attributes. Building on this idea, the literature has rapidly incorporated a myriad of extensions, devising fair versions of the existing frequentist clustering algorithms, e.g., k-means, k-medoids, etc., that aim at minimizing specific loss functions. These approaches lack uncertainty quantification associated with the optimal clustering configuration and only provide clustering boundaries without quantifying the probabilities associated with each observation belonging to the different clusters. In this article, we intend to offer a novel probabilistic formulation of the fair clustering problem that facilitates valid uncertainty quantification even under mild model misspecifications, without incurring substantial computational overhead. Mixture model-based fair clustering frameworks facilitate automatic uncertainty quantification, but tend to showcase brittleness under model misspecification and involve significant computational challenges. To circumvent such issues, we propose a generalized Bayesian fair clustering framework that inherently enjoys decision-theoretic interpretation. Moreover, we devise efficient computational algorithms that crucially leverage techniques from the existing literature on optimal transport and clustering based on loss functions. The gain from the proposed technology is showcased via numerical experiments and real data examples.

(This article belongs to the Special Issue Probabilistic Models in Machine and Human Learning)

Open Access Article

Linear Bayesian Estimation of Misrecorded Poisson Distribution

Entropy 2024, 26(1), 62; https://doi.org/10.3390/e26010062 - 11 Jan 2024

Abstract


Parameter estimation is an important component of statistical inference, and how to improve the accuracy of parameter estimation is a key issue in research. This paper proposes a linear Bayesian estimation for estimating parameters in a misrecorded Poisson distribution. The linear Bayesian estimation method not only adopts prior information but also avoids the cumbersome calculation of posterior expectations. On the premise of ensuring the accuracy and stability of computational results, we derived the explicit solution of the linear Bayesian estimation. Its superiority was verified through numerical simulations and illustrative examples.

Full article

(This article belongs to the Special Issue Bayesianism)

Open AccessArticle

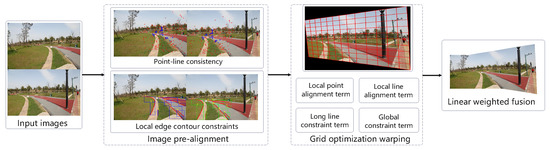

Research on Image Stitching Algorithm Based on Point-Line Consistency and Local Edge Feature Constraints

Entropy 2024, 26(1), 61; https://doi.org/10.3390/e26010061 - 10 Jan 2024

Abstract


Image stitching aims to synthesize a wider and more informative whole image, which has been widely used in various fields. This study focuses on improving the accuracy of image mosaic and proposes an image mosaic method based on local edge contour matching constraints. Because the accuracy and number of feature matches directly influence the stitching result, the image warping model is often estimated incorrectly when feature points are difficult to detect and mismatches occur easily. To address this issue, the geometric invariance is used to expand the number of feature matching points, thus enriching the matching information. Based on Canny edge detection, significant local edge contour features are constructed through operations such as structure separation and edge contour merging to improve the image registration effect. The method also introduces the spatial variation warping method to ensure the local alignment of the overlapping area, maintains the line structure in the image without bending by the constraints of short and long lines, and eliminates the distortion of the non-overlapping area by the global line-guided warping method. The proposed method is compared experimentally with other methods on multiple datasets and obtains excellent stitching results.

Full article

(This article belongs to the Special Issue Advances in Computer Recognition, Image Processing and Communications, Selected Papers from CORES 2023 and IP&C 2023)

►▼

Show Figures

Figure 1

Open AccessArticle

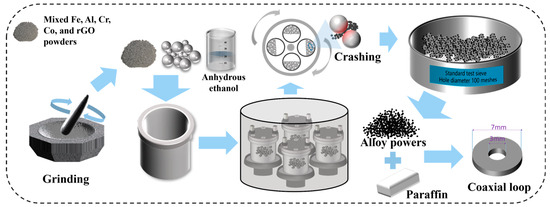

Effect of Reduced Graphene Oxide on Microwave Absorbing Properties of Al1.5Co4Fe2Cr High-Entropy Alloys

Entropy 2024, 26(1), 60; https://doi.org/10.3390/e26010060 - 10 Jan 2024

Abstract


The microwave absorption performance of high-entropy alloys (HEAs) can be improved by reducing the reflection coefficient of electromagnetic waves and broadening the absorption frequency band. The present work prepared flaky irregular-shaped Al1.5Co4Fe2Cr and Al1.5Co4Fe2Cr@rGO alloy powders by mechanical alloying (MA) at different rotational speeds. It was found that the addition of trace amounts of reduced graphene oxide (rGO) had a favorable effect on the impedance matching, reflection loss (RL), and effective absorbing bandwidth (EAB) of the Al1.5Co4Fe2Cr@rGO HEA composite powders. The EAB of the alloy powders prepared at 300 rpm increased from 2.58 GHz to 4.62 GHz with the additive, and the RL increased by 2.56 dB. The results showed that the presence of rGO modified the complex dielectric constant of HEA powders, thereby enhancing their dielectric loss capability. Additionally, the presence of lamellar rGO intensified the interfacial reflections within the absorber, facilitating the dissipation of electromagnetic waves. The effect of the ball milling speed on the defect concentration of the alloy powders also affected its wave absorption performance. The samples prepared at 350 rpm had the best wave absorption performance, with an RL of −16.23 and −17.28 dB for a thickness of 1.6 mm and EAB of 5.77 GHz and 5.43 GHz, respectively.

Open Access Editorial

Complexity and Statistical Physics Approaches to Earthquakes

Entropy 2024, 26(1), 59; https://doi.org/10.3390/e26010059 - 10 Jan 2024

Abstract

This Special Issue of Entropy, “Complexity and Statistical Physics Approaches to Earthquakes”, sees the successful publication of 11 original scientific articles [...]

(This article belongs to the Special Issue Complexity and Statistical Physics Approaches to Earthquakes)

Open Access Article

Objective Priors for Invariant e-Values in the Presence of Nuisance Parameters


Entropy 2024, 26(1), 58; https://doi.org/10.3390/e26010058 - 09 Jan 2024

Abstract


This paper aims to contribute to refining the e-values for testing precise hypotheses, especially when dealing with nuisance parameters, leveraging the effectiveness of asymptotic expansions of the posterior. The proposed approach offers the advantage of bypassing the need for elicitation of priors and reference functions for the nuisance parameters and the multidimensional integration step. For this purpose, starting from a Laplace approximation, only a posterior distribution for the parameter of interest is considered, and then a suitable objective matching prior is introduced, ensuring that the posterior mode aligns with an equivariant frequentist estimator. Consequently, both Highest Probability Density credible sets and the e-value remain invariant. Some targeted and challenging examples are discussed.

Full article

(This article belongs to the Special Issue Bayesianism)

►▼

Show Figures

Figure 1

Open AccessArticle

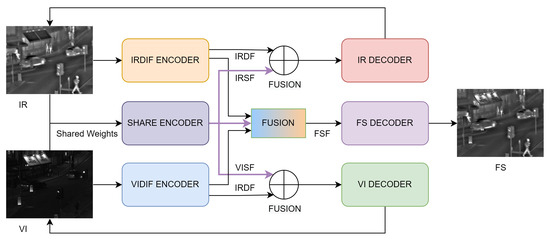

SharDif: Sharing and Differential Learning for Image Fusion


Entropy 2024, 26(1), 57; https://doi.org/10.3390/e26010057 - 09 Jan 2024

Abstract


Image fusion is the generation of an informative image that contains complementary information from the original sensor images, such as texture details and attentional targets. Existing methods have designed a variety of feature extraction algorithms and fusion strategies to achieve image fusion. However, these methods ignore the extraction of common features in the original multi-source images. The point of view proposed in this paper is that image fusion should retain, as much as possible, the useful shared features and complementary differential features of the original multi-source images. Shared and differential learning methods for infrared and visible light image fusion are proposed. An encoder with shared weights is used to extract shared common features contained in infrared and visible light images, and the other two encoder blocks are used to extract differential features of infrared images and visible light images, respectively. Effective learning of shared and differential features is achieved through weight sharing and loss functions. Then, the fusion of shared features and differential features is achieved via a weighted fusion strategy based on an entropy-weighted attention mechanism. The experimental results demonstrate the effectiveness of the proposed model with its algorithm. Compared with state-of-the-art methods, the significant advantage of the proposed method is that it retains the structural information of the original image and has better fusion accuracy and visual perception effect.

(This article belongs to the Section Signal and Data Analysis)
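The abstract above names an entropy-weighted fusion strategy but gives no formula, so the following is only an illustrative sketch of one way such a weighting could work, not the authors' method. It assumes each feature map is a flat list of floats, estimates a histogram-based Shannon entropy per map, and turns the entropies into fusion weights with a softmax. The function names and the `bins` parameter are hypothetical.

```python
import math
from collections import Counter


def histogram_entropy(values, bins=8):
    """Shannon entropy (in nats) of a fixed-width histogram of the values."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return 0.0  # a constant map carries no information
    width = (hi - lo) / bins
    # Clamp the top edge into the last bin.
    counts = Counter(min(int((v - lo) / width), bins - 1) for v in values)
    total = len(values)
    return -sum(c / total * math.log(c / total) for c in counts.values())


def entropy_weights(feature_maps):
    """Softmax over per-map entropies (assumed weighting, not from the paper)."""
    entropies = [histogram_entropy(f) for f in feature_maps]
    peak = max(entropies)  # subtract the max for numerical stability
    exps = [math.exp(e - peak) for e in entropies]
    norm = sum(exps)
    return [x / norm for x in exps]


def fuse(feature_maps):
    """Element-wise weighted sum of equally sized feature maps."""
    weights = entropy_weights(feature_maps)
    size = len(feature_maps[0])
    return [sum(w * f[i] for w, f in zip(weights, feature_maps)) for i in range(size)]


flat_map = [0.5] * 8                         # low-information features
varied_map = [float(v) for v in range(8)]    # high-information features
weights = entropy_weights([flat_map, varied_map])
fused = fuse([flat_map, varied_map])
```

In this toy input the varied map receives the larger weight because its histogram is spread over more bins; a real network would compute such weights on learned feature tensors rather than on Python lists.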

Open Access Article

Daily Streamflow of Argentine Rivers Analysis Using Information Theory Quantifiers

Entropy 2024, 26(1), 56; https://doi.org/10.3390/e26010056 - 09 Jan 2024

Abstract

This paper analyzes the temporal evolution of streamflow for different rivers in Argentina based on information quantifiers such as statistical complexity and permutation entropy. The main objective is to identify key details of the dynamics of the analyzed time series to differentiate the degrees of randomness and chaos. The permutation entropy is used with the probability distribution of ordinal patterns and the Jensen–Shannon divergence to calculate the disequilibrium and the statistical complexity. Daily streamflow series at different river stations were analyzed to classify the different hydrological systems. The complexity-entropy causality plane (CECP) and the representation of the Shannon entropy and Fisher information measure (FIM) show that the daily discharge series could be approximately represented with Gaussian noise, but the variances highlight the difficulty of modeling a series of natural phenomena. An analysis of stations downstream from the Yacyretá dam shows that the operation affects the randomness of the daily discharge series at hydrometric stations near the dam. When the station is further downstream, however, this effect is attenuated. Furthermore, the size of the basin plays a relevant role in modulating the process. Large catchments have smaller values for entropy, and the signal is less noisy due to integration over larger time scales. In contrast, small and mountainous basins present a rapid response that influences the behavior of daily discharge while presenting a higher entropy and lower complexity. The results obtained in the present study characterize the behavior of the daily discharge series in Argentine rivers and provide key information for hydrological modeling.

(This article belongs to the Special Issue Selected Featured Papers from Entropy Editorial Board Members)
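The quantifiers named in the abstract above (permutation entropy from ordinal patterns, and a statistical complexity built from the Jensen–Shannon divergence to the uniform distribution) can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's code: the function names, the default `order=3` and `delay=1`, and the choice to normalise the disequilibrium by the numerically computed maximum divergence are all assumptions made here.

```python
import math
from collections import Counter
from itertools import permutations


def ordinal_distribution(series, order=3, delay=1):
    """Probabilities of all order! ordinal patterns (Bandt-Pompe symbolisation)."""
    n_windows = len(series) - (order - 1) * delay
    if n_windows <= 0:
        raise ValueError("series too short for the chosen order and delay")
    counts = Counter()
    for start in range(n_windows):
        window = [series[start + k * delay] for k in range(order)]
        # The pattern is the index order that sorts the window; ties keep time order.
        pattern = tuple(sorted(range(order), key=lambda k: window[k]))
        counts[pattern] += 1
    return [counts[p] / n_windows for p in permutations(range(order))]


def shannon(probs):
    """Shannon entropy in nats, skipping zero-probability entries."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def permutation_entropy(probs):
    """Shannon entropy normalised by log(number of patterns), so it lies in [0, 1]."""
    return shannon(probs) / math.log(len(probs))


def jensen_shannon(p, q):
    """Jensen-Shannon divergence between two distributions of equal length."""
    mix = [(a + b) / 2 for a, b in zip(p, q)]
    return shannon(mix) - (shannon(p) + shannon(q)) / 2


def statistical_complexity(probs):
    """Normalised disequilibrium times normalised entropy."""
    n = len(probs)
    uniform = [1 / n] * n
    delta = [1.0] + [0.0] * (n - 1)  # a fully ordered signal maximises the divergence
    disequilibrium = jensen_shannon(probs, uniform) / jensen_shannon(delta, uniform)
    return disequilibrium * permutation_entropy(probs)


rising = list(range(1, 11))  # toy stand-in for a daily discharge series
rising_probs = ordinal_distribution(rising)
rising_entropy = permutation_entropy(rising_probs)
rising_complexity = statistical_complexity(rising_probs)
```

A strictly rising series produces a single ordinal pattern, so both its normalised entropy and its complexity are zero; a series whose patterns are equally likely has entropy one and, again, complexity zero. Points in the complexity-entropy causality plane are the pairs (entropy, complexity) computed this way for each series.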

Open Access Correction

Correction: Maillard et al. Assessing Search and Unsupervised Clustering Algorithms in Nested Sampling. Entropy 2023, 25, 347

Entropy 2024, 26(1), 55; https://doi.org/10.3390/e26010055 - 09 Jan 2024

Abstract

There was an error in the original publication [...]

(This article belongs to the Special Issue MaxEnt 2022—the 41st International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering)

Topics

Topic in

Entropy, Energies, Processes, Water, JMSE

Advances in Efficiency, Cost, Optimization, Simulation and Environmental Impact of Energy Systems from ECOS 2023

Topic Editors: Pedro Cabrera, Enrique Rosales Asensio, María José Pérez Molina, Beatriz Del Río-Gamero, Noemi Melián Martel, Dunia Esther Santiago García, Alejandro Ramos Martín, Néstor Florido Suárez, Carlos Alberto Mendieta Pino, Federico León Zerpa

Deadline: 1 February 2024

Topic in

Crystals, Entropy, Materials, Metals, Nanomaterials

High-Performance Multicomponent Alloys

Topic Editors: Xusheng Yang, Honghui Wu, Wangzhong Mu

Deadline: 29 February 2024

Topic in

Entropy, Photonics, Physics, Plasma, Universe, Fractal Fract, Condensed Matter

Applications of Photonics, Laser, Plasma and Radiation Physics

Topic Editors: Viorel-Puiu Paun, Eugen Radu, Maricel Agop, Mircea Olteanu

Deadline: 30 March 2024

Topic in

Sensors, J. Imaging, Electronics, Applied Sciences, Entropy, Digital, J. Intell.

Advances in Perceptual Quality Assessment of User Generated Contents

Topic Editors: Guangtao Zhai, Xiongkuo Min, Menghan Hu, Wei Zhou

Deadline: 31 March 2024

Special Issues

Special Issue in

Entropy

Information Theory and Uncertainty Analysis in Industrial and Service Systems

Guest Editors: Irad E. Ben-Gal, Parteek Kumar Bhatia, Eugene Kagan

Deadline: 20 January 2024

Special Issue in

Entropy

Quantum Models of Cognition and Decision-Making II

Guest Editors: Andrei Khrennikov, Fabio Bagarello

Deadline: 31 January 2024

Special Issue in

Entropy

Information Theory in Emerging Wireless Communication Systems and Networks

Guest Editor: Erdem Koyuncu

Deadline: 15 February 2024

Special Issue in

Entropy

Non-equilibrium Phase Transitions

Guest Editors: Carlos E. Fiore, Silvio C. Ferreira

Deadline: 29 February 2024

Topical Collections

Topical Collection in

Entropy

Algorithmic Information Dynamics: A Computational Approach to Causality from Cells to Networks

Collection Editors: Hector Zenil, Felipe Abrahão

Topical Collection in

Entropy

Wavelets, Fractals and Information Theory

Collection Editor: Carlo Cattani

Topical Collection in

Entropy

Entropy in Image Analysis

Collection Editor: Amelia Carolina Sparavigna